ColBERT

Efficient and Effective Passage Search via Contextualized Late Interaction over BERT

Introduction: where ColBERT fits

Vector search has experienced explosive growth in recent years, especially since the advent of large language models (LLMs), and it is built on models that employ embedding-based representations of queries and documents and directly model local interactions (i.e., fine-grained relationships) between their contents. This popularity has prompted a significant focus in academic research on enhancing embedding models through expanded training data, advanced training methods, and new architectures. However, these new embedding-based representations come at a steep computational cost.

Another problem with traditional encoder models, such as all-MiniLM or OpenAI’s embedding models, is that they compress the entire text into a single vector embedding. Single-vector embeddings are useful because they make retrieval of similar documents quick and efficient. The weakness lies in capturing the contextual relationship between the query and the document: a single vector may not be sufficient to store the contextual information of a document chunk, which creates an information bottleneck.

A third difficulty in retrieval is that off-the-shelf models struggle with unusual terms, including names, that are not common in their training data. They also tend to be hypersensitive to chunking strategy, which can cause a relevant passage to be missed if it’s surrounded by a lot of irrelevant information. All of this creates a burden on the application developer to “get it right the first time,” because a mistake usually results in the need to rebuild the index from scratch.

To reconcile efficiency and contextualization in information retrieval (search), researchers at Stanford proposed ColBERT (and later ColBERTv2), a ranking model based on contextualized late interaction over BERT.1

BERT

“ColBERT” stands for Contextualized Late Interaction over BERT; it leverages the deep language understanding of BERT while introducing a novel interaction mechanism. BERT, short for Bidirectional Encoder Representations from Transformers, is a language model based on the transformer architecture that is widely used as the backbone of dense embedding and retrieval models. Unlike traditional sequential natural language processing methods that read a sentence from left to right or vice versa, BERT grasps word context by analyzing the entire word sequence simultaneously, thereby generating contextualized dense embeddings.

One might initially assume that incorporating BERT’s deep contextual understanding into search would inherently require significant computational resources, making such an approach less feasible for real-time applications due to high latency and computational costs. However, ColBERT challenges and overturns this assumption through its innovative use of the late interaction mechanism. Further, unlike single-vector models built on BERT, which consolidate token vectors into a singular representation, ColBERT maintains per-token representations, offering a finer granularity in similarity calculation.

What is late interaction in ColBERT?

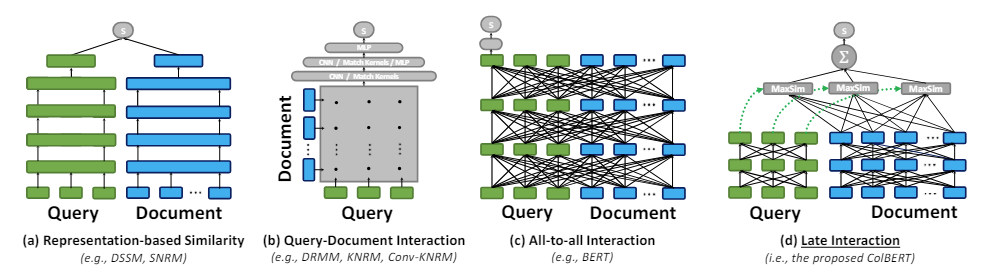

“Interaction” refers to the process of evaluating the relevance between a query and a document by comparing their representations. “Late interaction” is the essence of ColBERT. The term is derived from the model’s architecture and processing strategy, where the interaction between the query and document representations occurs late in the process, after both have been independently encoded. This contrasts with “no interaction” and “cross encoder” models.

No interaction models, also known as representation-based models, encode queries and documents into single-vector representations and rely on simple similarity measures like cosine similarity to determine relevance. This approach often underperforms compared to interaction-based models because it fails to capture the complex nuances and relationships between query and document terms. The aggregated embeddings for documents and queries struggle to provide a deep, contextual understanding of the terms involved, which is crucial for effective information retrieval.
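To make the information bottleneck concrete, here is a minimal sketch of single-vector scoring. It assumes mean pooling as the aggregation step (real models may pool differently, for example with a dedicated classification token), and the two-dimensional token vectors are made-up toy values. The two documents have different token vectors but collapse to the same pooled vector, so the query cannot tell them apart.

```python
import math

def mean_pool(token_vectors):
    """Average token vectors into one vector (an assumed pooling choice)."""
    n = len(token_vectors)
    dim = len(token_vectors[0])
    return [sum(v[i] for v in token_vectors) / n for i in range(dim)]

def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(x * x for x in b))
    return dot / (norm_a * norm_b)

# Toy token embeddings (hypothetical values, 2 dimensions for readability).
query_tokens = [[1.0, 0.0]]
doc_a_tokens = [[1.0, 0.0], [0.0, 1.0]]   # contains an exact match for the query token
doc_b_tokens = [[0.5, 0.5], [0.5, 0.5]]   # contains no exact match

# Both documents pool to [0.5, 0.5], so their scores are identical.
score_a = cosine(mean_pool(query_tokens), mean_pool(doc_a_tokens))
score_b = cosine(mean_pool(query_tokens), mean_pool(doc_b_tokens))
```

Here `score_a` and `score_b` come out equal even though only the first document contains a token that matches the query exactly; that lost distinction is what per-token representations are meant to preserve.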

Cross encoders process pairs of queries and documents together, feeding them into a model (such as BERT) to compute a single relevance score for each pair. While cross encoders can be highly accurate due to their ability to consider the full context of both the query and the document simultaneously, they are less efficient for large-scale tasks. This inefficiency stems from the computational cost of evaluating every possible query-document pair, making them impractical for real-time applications or when dealing with large document collections.

Late interaction models like ColBERT optimize for efficiency and scalability by pre-computing document representations and employing a lightweight interaction step at the end, which operates on the already encoded representations. This design choice enables faster retrieval times and reduced computational demands, making it more suitable for processing large document collections. Specifically, a batch dot-product is computed between the query token embeddings and document token embeddings to generate term-wise similarity scores. Max-pooling is then applied across document terms to find the highest similarity for each query term, and these scores are summed across query terms to derive the total document score.
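The offline/online split described above can be sketched as follows. This is an illustration under loud assumptions, not ColBERT's implementation: `TOY_VECTORS` and `encode` are hypothetical stand-ins for a BERT-based encoder (a real encoder produces contextualized vectors, which a lookup table cannot), and the index is a plain dictionary rather than a vector database.

```python
# Hypothetical stand-in for a BERT-based token encoder: a fixed lookup table.
TOY_VECTORS = {
    "cheap":   [1.0, 0.0],
    "budget":  [0.8, 0.6],
    "flights": [0.0, 1.0],
    "hotels":  [0.6, -0.8],
}

def encode(text):
    """Toy encoder: one vector per whitespace-separated token."""
    return [TOY_VECTORS[token] for token in text.split()]

def late_interaction_score(query_vecs, doc_vecs):
    # Term-wise similarities: one row per query token, one column per document token.
    sim = [[sum(q * d for q, d in zip(qv, dv)) for dv in doc_vecs]
           for qv in query_vecs]
    # Max-pool across document tokens, then sum across query tokens.
    return sum(max(row) for row in sim)

# Offline: encode every document once and store its token vectors.
documents = ["budget flights", "cheap hotels"]
index = {doc: encode(doc) for doc in documents}

# Online: encode only the query, then score it against the stored vectors.
query_vecs = encode("cheap flights")
ranking = sorted(
    index,
    key=lambda doc: late_interaction_score(query_vecs, index[doc]),
    reverse=True,
)
```

The expensive encoding of documents happens once, before any query arrives; at query time only the short query is encoded and the remaining work is dot products, maxima, and a sum.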

In Practice

ColBERT takes in a query or a document (typically a small document or a chunk of a larger one) and creates vector embeddings at the token level. That is, each token gets its own vector embedding, and the query or document is encoded as a list of token-level vector embeddings. The token-level embeddings are generated by a pre-trained BERT model, hence the “BERT” in the name. The document embeddings are then stored in the vector database. When a query comes in, a list of token-level embeddings is created for it, and a matrix multiplication is performed between the query’s token embeddings and each document’s token embeddings, resulting in a matrix of similarity scores. The overall similarity is the sum, over the query tokens, of the maximum similarity across the document tokens:

$$S(q, d) = \sum_{i=1}^{N} \max_{j=1}^{M} \left( Q D^{\top} \right)_{ij}$$

In the equation above, we take the dot product between the query token matrix $Q$ (containing $N$ token-level vector embeddings) and the transpose of the document token matrix $D$ (containing $M$ token-level vector embeddings), and then take the maximum similarity across the document tokens for each query token. Summing these maxima gives the final similarity score between the document and the query. This produces effective and accurate retrieval because the interaction happens at the token level, which leaves room for more contextual understanding between the query and the document.
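As a worked instance of the sum-of-maxima score described above, suppose a query with $N = 2$ tokens and a document with $M = 3$ tokens produce the similarity matrix below. The numbers are made up purely for illustration. Each row belongs to one query token, so we take the maximum of each row and add the results:

$$Q D^{\top} = \begin{pmatrix} 0.9 & 0.2 & 0.1 \\ 0.3 & 0.4 & 0.8 \end{pmatrix}, \qquad S(q, d) = \max(0.9, 0.2, 0.1) + \max(0.3, 0.4, 0.8) = 0.9 + 0.8 = 1.7$$

Note that the two query tokens find their best match at different document tokens (the first and the third), which is exactly the fine-grained matching a single pooled vector cannot express.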

Here it is in pseudo code:

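    def maxsim(qv, document_embeddings):
        # Highest dot-product similarity between one query token vector and
        # any document token vector (vectors are assumed to be 1-D NumPy arrays).
        return max(qv @ dv for dv in document_embeddings)

    def score(query_embeddings, document_embeddings):
        # Sum the per-query-token maxima to get the document's overall score.
        return sum(maxsim(qv, document_embeddings) for qv in query_embeddings)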

Because the token-level embeddings of the documents are computed ahead of time and only this MaxSim (maximum similarity) operation is performed at query time, the scoring is called a late interaction step; and because the token-level interactions carry more contextual information, it is called contextualized late interaction. Hence the name Contextualized Late Interaction over BERT, or ColBERT. These computations can be performed in parallel, so they can be computed efficiently. One remaining concern is space: storing a list of token-level vector embeddings for every document requires a lot of storage. ColBERTv2 addresses this by compressing the embeddings with a technique called residual compression, which reduces the space used.
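The idea behind residual compression can be sketched roughly as follows: instead of storing every token vector in full precision, store the index of its nearest centroid plus a coarsely quantized residual (the difference between the vector and that centroid). The sketch below is only an illustration of that idea, not ColBERTv2's actual codec; the centroids, the one-bit-per-dimension quantizer, and the `step` value are all assumptions chosen to keep the example small.

```python
def nearest_centroid(vec, centroids):
    """Index of the centroid with the smallest squared distance to vec."""
    def sq_dist(c):
        return sum((x - y) ** 2 for x, y in zip(vec, c))
    return min(range(len(centroids)), key=lambda i: sq_dist(centroids[i]))

def compress(vec, centroids):
    """Encode vec as (centroid index, one sign bit per dimension of the residual)."""
    idx = nearest_centroid(vec, centroids)
    residual = [x - c for x, c in zip(vec, centroids[idx])]
    bits = [1 if r >= 0 else 0 for r in residual]
    return idx, bits

def decompress(code, centroids, step):
    """Approximate the original vector as centroid +/- step in each dimension."""
    idx, bits = code
    return [c + (step if b else -step) for c, b in zip(centroids[idx], bits)]

# Hypothetical centroids (in practice these would come from clustering,
# e.g. k-means over a sample of token embeddings).
centroids = [[0.0, 0.0], [1.0, 1.0]]
vec = [0.9, 1.2]

code = compress(vec, centroids)                  # small integer + a few bits
approx = decompress(code, centroids, step=0.1)   # lossy reconstruction
```

The stored code is one small integer and one bit per dimension instead of one float per dimension, at the cost of a lossy reconstruction: here `vec` is recovered only approximately.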

Practicality for Real-World Applications

Experimental results underline ColBERT’s practical applicability for real-world scenarios. Its indexing throughput and memory efficiency make it suitable for indexing large document collections like MS MARCO within a few hours, retaining high effectiveness with a manageable space footprint. These qualities highlight ColBERT’s suitability for deployment in production environments where both performance and computational efficiency are paramount.

-

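1. Khattab and Zaharia, “ColBERT: Efficient and Effective Passage Search via Contextualized Late Interaction over BERT,” https://arxiv.org/pdf/2004.12832.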