Chunking in RAG

Chunking strategies for increasing effectiveness of RAG systems

Introduction

Language models have become an important and flexible building block in a variety of user-facing language technologies, including conve2024-02-05rsational interfaces, search and summarization, and collaborative writing. These models perform downstream tasks primarily via prompting: all relevant task specification and data to process is formatted as a textual input context, and the model returns a generated text completion. These input contexts can contain thousands of tokens, especially when language models are used to process long documents (e.g., legal or scientific documents, conversation histories, etc.) or when language models are augmented with external information.

Existing language models are generally implemented with Transformers, which require memory and compute that increases quadratically in sequence length. As a result, Transformer language models were often trained with relatively small context windows (between 512- 2048 tokens)1. Despite relatively small context windows, it has been observed that language models still struggle to robustly access and use information in their input contexts.2

This finding is pertinent for retrieval augmentation generation (RAG) pipelines, who’s context is critical for successful inference. However, when designing such a system, one should not begin by focusing on the final stage in a pipeline, namely if the model is successfully using the context provided, but rather start at the beginning of said pipeline. This follows the well known data science moniker: garbage in leads to garbage out. This leads us to the topic of this post, and the first preprocessing step in any RAG pipeline: chunking.

What is chunking?

Chunking is how you break up your own data as part of a RAG pipeline so it can be 1) stored & retrieved effectively and 2) provide accurate and prudent context to an LLM at inference. Unlike fine-tuning, RAG enables an LLM to work with your own data (data that is has not been trained on)

Why Chunk?

- LLMs have limited context window

- Not realistic to provide all data to LLM at once

- You will want to feed the LLM only relevant information at inference time

Considerations

- Length of text

- books, academic articles

- Short text (smaller chunks)

- customer review, social media

- Understand embedding model so it is compatible with our chunk length for token size limits

- What is our use case? Short Q&A or are longer responses desired

- Just because a model can take a larger context window, doesn’t mean it should be given more tokens as context (memory constraints and latency, compute cost)

Chunking Strategies

Naive Chunking

- Developer-defined fixed number of characters

- Can set a chunk overlap or a delimiter

- It is fast and efficient but it does not account for document structure (headings, sections, etc.)

from langchain.text_splitter import CharacterTextSplitter

splitter = CharacterTextSplitter(

chunk_size=100

chunk_overlap=10

separator="\n\n"

)

chunks = splitter.create_documents([text])```

### NLP-Driven Sentence Splitting

LangChain integrations with NLP frameworks *NLTK* and *SpaCy*

who have powerful sentence segmentation features.

```python

from langchain.text_splitter import NLTKTextSplitter, SpacyTextSplitter

#nltk

splitter = NLTKTextSplitter()

chunks = splitter.split_text(text)

#SpaCy

splitter = SpaCyTextSplitter()

chunks = splitter.split_text(text)

Recursive Character Text Splitter

A langchain method that allows you to define chunk size and overlap, but it addition, it will recursively split text according to text type using user-defined text splitters. In other words, it involves document structure into how the splitting is done. It seeks to chunk it recursive fashion by breaking down text according to the larger separator (eg. double new line), to the smaller (ex. characters.)

For example, if our chunk_size is 500 but we have a continuous paragraph of 600, the recursive splitter will split the 600 character paragraph into smaller units -> paragraph -> sentence -> word -> character to maximize the amount it could fit into the allotment of 500. On the other hand, if we had a paragraph with only 400 characters, the splitter would cut the chunk at 400 to maintain congruity rather than adding an additional 100 characters from the next paragraph to reach the 500 character limit.

text_splitter = RecursiveCharacterTextSplitter(chunk_size=500, chunk_overlap=0)

pages = [p.page_content for p in text_splitter.split_documents(doc)]

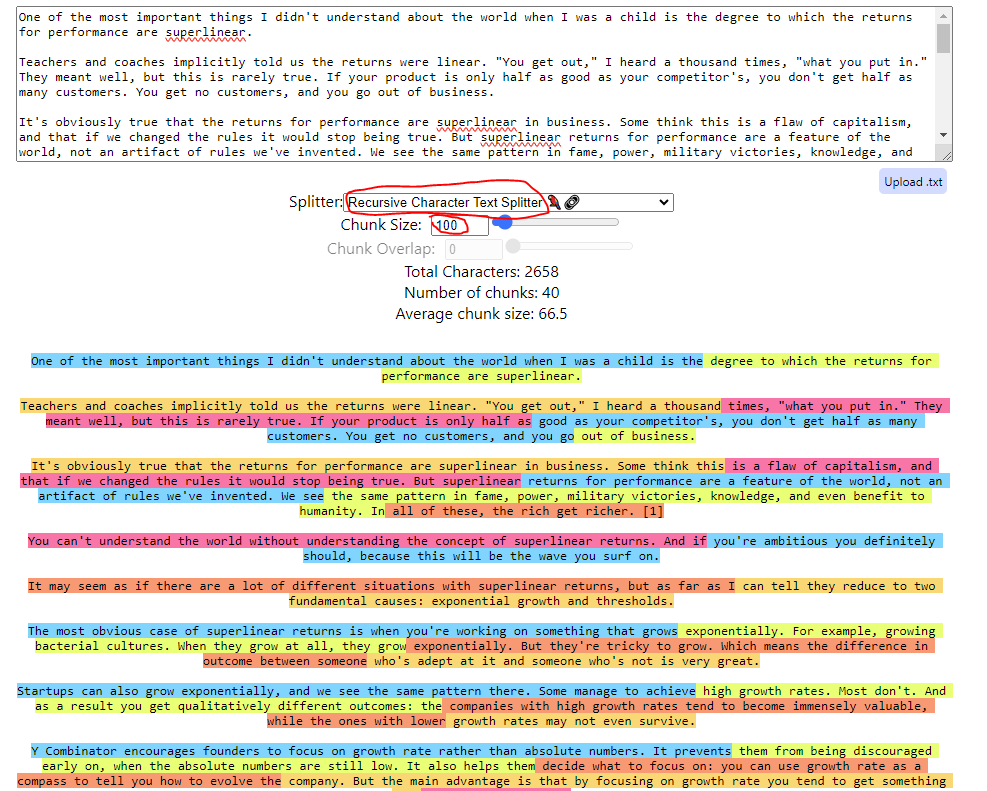

To demonstrate the usefulness of recursive chunking, lets use the amazing tool at [chunkviz.com] to show how increasing chunk size leads to clean separation of paragraphs. We set the splitter to the recursive splitter, and start with a chunk size of 100.

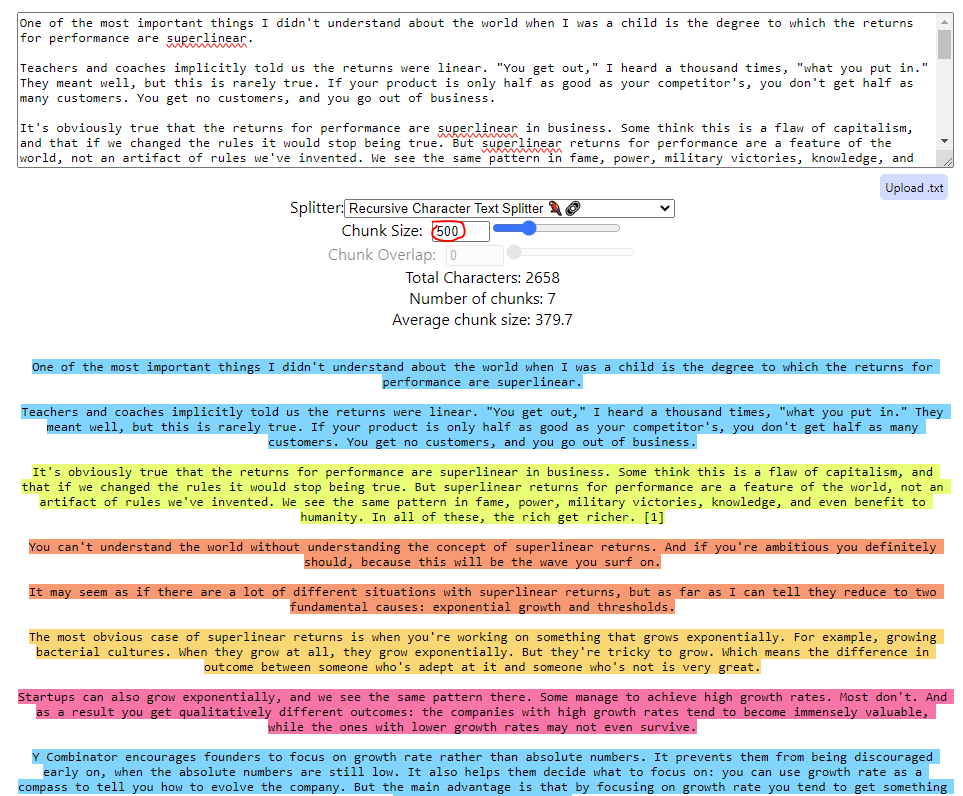

This does not lead to a great semantic split. However, when we increase chunk size to 500, we get a different effect:

Now we are getting clear semantic chunking of paragraphs, which will lead to more unique and uniform context vectors for our LLM at retrieval and inference.

Structural Chunking

Structure chunking can be used in tandem with previously mentioned chunking methods to split HTML or markdown documents based on headers and underlying subsections.

headers = {

("h1": "Header 1"),

("h2": "Header 2"),

("h3": "Header 3"),

("h4": "Header 4")

}

html_splitter = HTMLHeaderTextSplitter(headers_to_split_on=headers)

header_splits = html_splitter.split_text_from_url(url)

splitter = RecursiveCharacterTextSplitter(

chunk_size=800,

overlap=40

)

chuchunks = splitter.split_documents(header_splits)

Semantic Chunking

Usually, we try and chunk entire paragraphs and summarize or embed them because we assume it will contain uniform information. But what if this is more than one semantic meaning or message in a paragraph. Shouldn’t we take into account the actual content? This is where semantic chunking comes in. This method is more intensive.

Its like the difference between arranging books on a shelf by size (our previous chunking methods), verses arraigning them by content (where you have to actually read the contents and understand them).

For implementation, Llamindex has SemanticSplitterNodeParse class that allows to split the document into chunks using contextual relationship between chunks. This adaptively picks the breakpoint in-between sentences using embedding similarity.

NER Chunking

Agent-based Chunking

Summarization

This is good for large documents that cannot fit in an LLM’s context window, for example articles or book chapters. Langchain has something called summarization chains which allow for summarization documents via an LLM, prior to later feeding that summary to another LLM at inference. It does so by building a running summary of a document by summarizing sequential chunks and iteratively building a summary of the entire document by combining the summary of all the chunks.

from langchain.chains.summarize import load_summarize_chain

from langchain.chat_models import ChatOpenAI

from langchain.text_splitter import CharacterTextSplitter

llm = ChatOpenAI(temperature=0, model_name="gtp-3.5-turbo-16k")

splitter = CharacterTextSplitter(

separator="\n",

chunk_size=800,

overlap=80

)

#chunk up large document

chunks = splitter.create_documents(docs)

chain = load_summarize_chain(llm, chain_type='refine')

summary = chain.run(chunks)

Chunk Decoupling

This is a way to provide a lot of context to an LLM, like in the form of an entire document, while minimizing storage for a vector DB as well as lookup latency.

In short, this means smaller texts for retrieval, and larger texts for generation.

The steps involve:

- summarize and embed documents

- link summaries to raw document

- retrieve relevant summaries from vector DB using the user’s query

- Pass the linked document of the summary with the highest similarity to the LLM instead of just the corresponding summary.

A similar method called sentence text windows can also be applied. Here, a document is chunked and embedded at a sentence level. When a relevant sentence is retrieved from the vector DB, it is passed to the LLM within a larger window surrounding that sentence to provide additional context to the LLM.

See this jupyter notebook on my github for a look at semantic chunking: https://github.com/jackhmiller/LLMs/blob/main/Chunking_Text_Splitting/SemanticChunker.ipynb

-

Tokenization is the process of splitting the input and output texts into smaller units that can be processed by the LLM AI models. Tokens can be words, characters, subwords, or symbols, depending on the type and the size of the model. A token is typically not a word; it could be a smaller unit, like a character or a part of a word, or a larger one like a whole phrase. In the GPT architecture, a sequence of tokens serves as the input and/or output during both training and inference (the stage where the model generates text based on a given prompt). For example, in the sentence “Hello, world!”, the tokens might be “Hello”, “,”, “ world”, “!” depending on how the tokenization is performed. ↩

-

Liu, N. F., Lin, K., Hewitt, J., Paranjape, A., Bevilacqua, M., Petroni, F., & Liang, P. (2023). Lost in the Middle: How Language Models Use Long Contexts. https://arxiv.org/abs/2307.03172. ↩